Technology

Bryan Mamaril & Adi Azulay

Bryan Mamaril

Associate Director, UX Design

Adi Azulay

Interaction Designer

AI image generators have commanded global attention with viral images ranging from Pope Francis as a fashion icon to Queen Elizabeth doing her own laundry.

The images in this article were created in Midjourney, which can produce stunning, realistic visuals. Many people have signed up to use the app, but they quickly realize that getting results is easy while getting good results is much harder. Did you notice that the header image of this article features two hands, one with four fingers and the other with six? Getting usable imagery out of tools like Midjourney takes a lot of trial and error, money, and research, and the learning curve is steep for new users.

Companies like Adobe and Microsoft are trying to bring AI image generation to the masses with more accessible, free options, but even though these are easier to use, there’s still a significant learning curve. Many people also don’t trust AI: it’s difficult, or impossible, to discern where the images came from, how they were generated, or whether they’re safe to use personally or professionally.

Given these challenges, our team was inspired to create a concept for an AI image generator that is easier to use, helps users understand what went into making the image, and gives them tools to assess whether that image is right for them.

Because of how image generators work, users have to open these tools knowing exactly what they’re looking for and how to describe it precisely. Confident users thrive here, relying on extensive experimentation and outside research. For example, an expert prompt can get as detailed as this:

“photo of an extremely cute alien fish swimming an alien habitable underwater planet, coral reefs, dream-like atmosphere, water, plants, peaceful, serenity, calm ocean, transparent water, reefs, fish, coral, inner peace, awareness, silence, nature, evolution --version 3 --s 42000 --uplight --ar 4:3 --no text, blur”

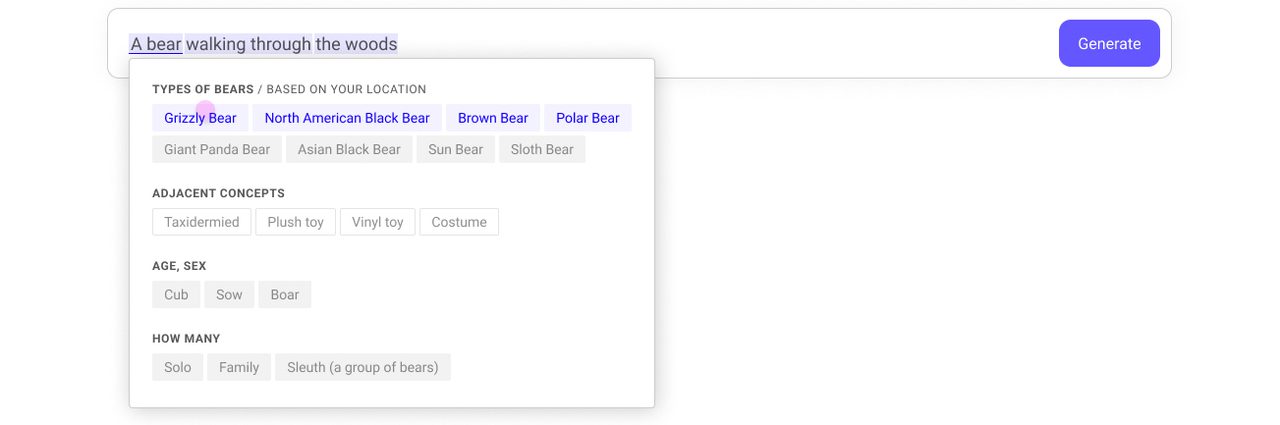

Novice users, however, struggle to go beyond the suggested starting point of what, where, and style. Because auto-suggest is a familiar experience in search engines, we decided to give our conceptual AI tool a similar approach to image prompting. For example, if a user types “bear,” the tool could recognize that term and surface the top three examples of things that bears do.

When the user finishes typing a prompt, they could click on an entity and see a pop-up of additional details to add. This helps expose new categories of criteria to further refine the image.
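To make the suggestion flow concrete, here is a minimal Python sketch, assuming a hypothetical hard-coded index (`SUGGESTIONS`, `DETAILS`) that maps a recognized entity to scored completions and to the detail categories shown in its pop-up. A real tool would presumably draw these from a model or knowledge base; the function names, scores, and categories here are illustrative only.

```python
from __future__ import annotations

# Hypothetical index: entity -> {completion: popularity score}.
SUGGESTIONS = {
    "bear": {
        "catching salmon": 120,
        "sleeping in a den": 80,
        "walking in snow": 60,
        "climbing a tree": 45,
    },
}

# Hypothetical detail categories shown when the user clicks an entity.
DETAILS = {
    "bear": {
        "species": ["grizzly bear", "black bear", "polar bear"],
        "location": ["forest", "river", "tundra"],
    },
}


def suggest(prompt: str, limit: int = 3) -> list[str]:
    """Return up to `limit` completions for the last recognized entity in the prompt."""
    for word in reversed(prompt.lower().split()):
        entity = word.strip(".,!?")
        if entity in SUGGESTIONS:
            # Highest popularity score first, like search auto-suggest.
            ranked = sorted(SUGGESTIONS[entity].items(), key=lambda kv: kv[1], reverse=True)
            return [f"{entity} {action}" for action, _ in ranked[:limit]]
    return []  # no recognized entity, so nothing to suggest


def details_for(entity: str) -> dict[str, list[str]]:
    """Return the extra detail categories for an entity the user clicks on."""
    return DETAILS.get(entity.lower(), {})


print(suggest("a bear"))
# → ['bear catching salmon', 'bear sleeping in a den', 'bear walking in snow']
```

Ranking by a single popularity score keeps the sketch simple; any other relevance signal could replace the score without changing the structure.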

Results could become more relevant by taking the user’s location into account. For example, knowing that the user is located in Seattle, the AI could identify which bear species have habitats closer to that region and rank them higher in the suggestions.
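Location-aware ranking could be sketched as sorting candidate species by great-circle (haversine) distance from the user. The `HABITATS` coordinates below are rough, hypothetical representative points chosen for illustration, not real range data; an actual system would need proper range maps rather than a single point per species.

```python
from __future__ import annotations

import math

EARTH_RADIUS_KM = 6371.0

# Hypothetical representative habitat points (lat, lon), for illustration only.
HABITATS = {
    "polar bear": (70.0, -150.0),
    "sloth bear": (21.0, 78.0),
    "grizzly bear": (49.5, -120.5),
    "black bear": (47.8, -123.6),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def rank_by_proximity(user_location: tuple[float, float], habitats: dict = HABITATS) -> list[str]:
    """Order species so those with habitats nearest the user come first."""
    return sorted(habitats, key=lambda species: haversine_km(user_location, habitats[species]))


SEATTLE = (47.61, -122.33)
print(rank_by_proximity(SEATTLE))
# → ['black bear', 'grizzly bear', 'polar bear', 'sloth bear']
```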

Having selected “grizzly bear,” for instance, when the user selects a location, the tool would surface more contextually relevant information, such as a grizzly bear’s habitat. All of this happens before an image is generated, which makes reaching the desired image more efficient.

This feature also presents opportunities for micro-learning; for example, it could share that a group of bears is called a “sleuth.” This way, users gain more context and knowledge about the things they are creating, and the image generator supports them in shaping the content of the image rather than just rendering the final result.

Numerous calls for regulation have emerged amid fears about the power of AI superintelligence and the potential impact of deepfakes on politics and national security. Industry leaders are calling for government regulation, including the creation of new federal agencies. Organizations such as the Content Authenticity Initiative are promoting industry standards that make it possible to determine whether AI was used to create or alter an image.

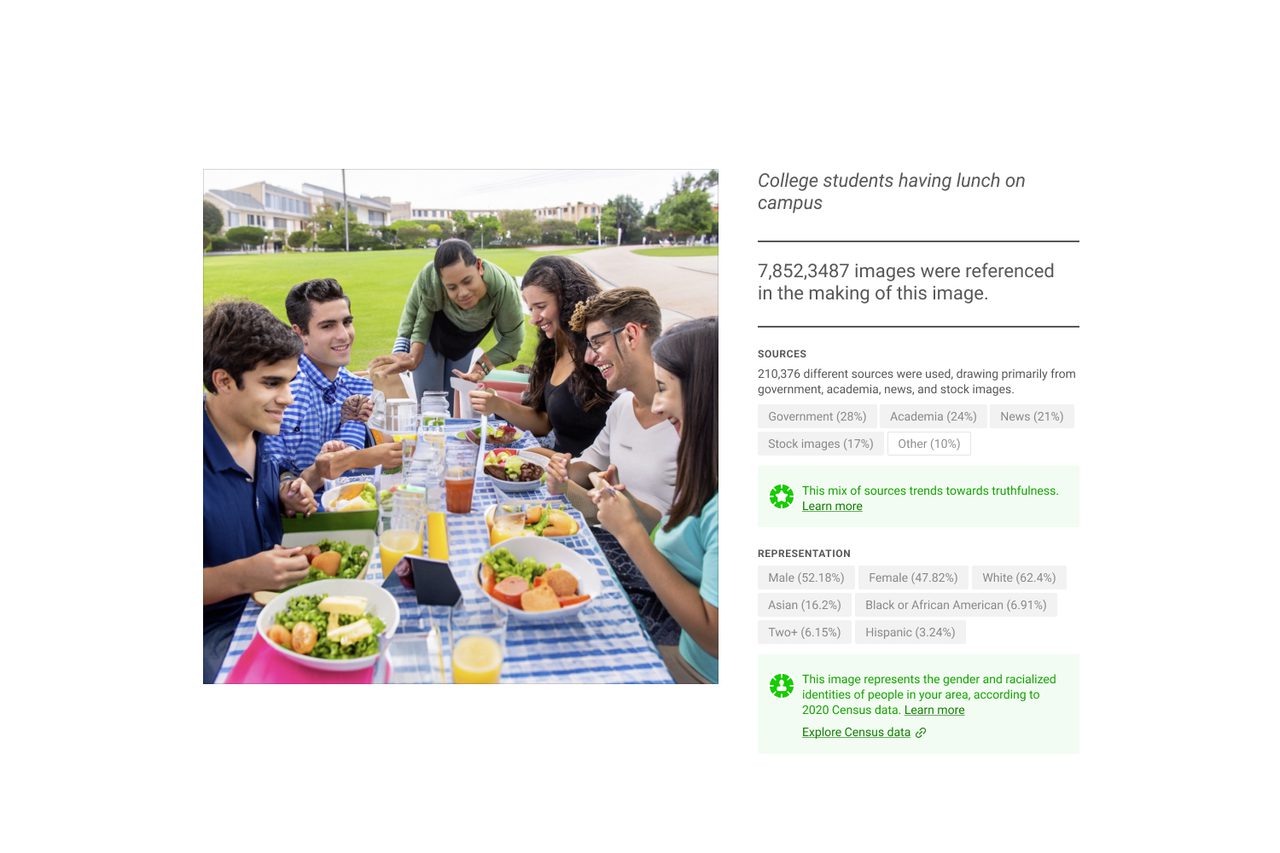

Federal laws require manufacturers to list ingredients on food labels, with major allergens clearly called out. This helps consumers know that their food won’t kill them or their loved ones, and empowers them to decide whether they want to eat it.

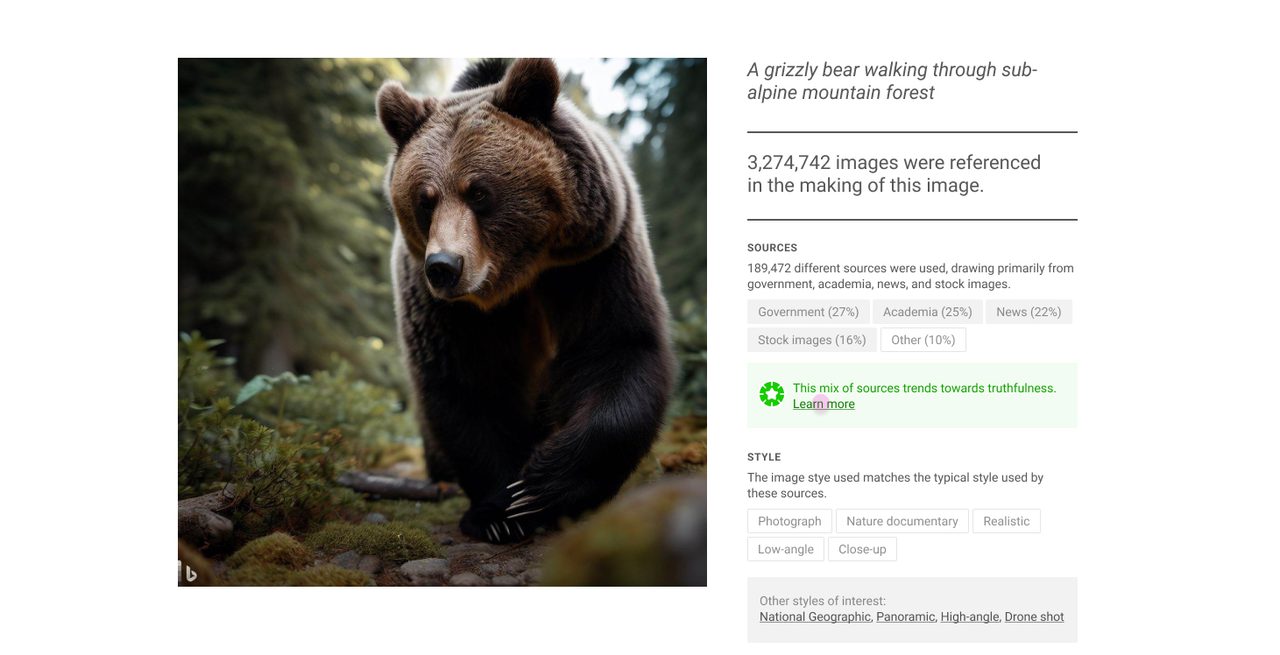

In a similar way, a basic set of “nutrition facts” standards, including sources, could be established for AI image generators to build trust among users. This transparency could help people understand what went into making a particular image and decide whether it is the right image to use.

Image details could show the total number of images referenced and how many different sources were used. A broad range of sampled sources signals a lower risk of the image being biased toward any one person’s art, likeness, or idea.

The kinds of sources matter too. In this example, 90% of the sources were government, academic, news, and stock images: sources that are more likely to “trend toward truthfulness,” or to depict bears in ways we’re accustomed to. If a generated image drew more on social media and blogs, on the other hand, those sources may have taken more liberties, and the image should be labeled accordingly. Simply knowing where images were sampled from can go a long way toward making users more comfortable.
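A label like this could be computed from metadata about the reference images. The sketch below assumes each reference image carries a source name and a category; the category sets (`TRUSTED`, `LOOSER`) and the rule “flag when looser sources outweigh trusted ones” are our illustrative assumptions, not an existing standard.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

# Hypothetical category groupings for the label.
TRUSTED = {"government", "academia", "news", "stock"}
LOOSER = {"social_media", "blog"}


@dataclass(frozen=True)
class ReferenceImage:
    source: str    # publisher or site the image came from
    category: str  # hypothetical category label, e.g. "news" or "blog"


def nutrition_label(refs: list[ReferenceImage]) -> dict:
    """Summarize the reference images behind one generated image."""
    if not refs:
        raise ValueError("no reference images to summarize")
    total = len(refs)
    counts = Counter(r.category for r in refs)
    trusted = sum(n for cat, n in counts.items() if cat in TRUSTED) / total
    looser = sum(n for cat, n in counts.items() if cat in LOOSER) / total
    return {
        "images_referenced": total,
        "distinct_sources": len({r.source for r in refs}),
        "category_shares": {cat: n / total for cat, n in counts.items()},
        "trusted_share": trusted,
        # Mark the image when it leans more on social media and blogs.
        "flag_looser_sources": looser > trusted,
    }


refs = (
    [ReferenceImage("agency_a", "government")] * 3
    + [ReferenceImage("university_b", "academia")] * 2
    + [ReferenceImage("newswire_c", "news")] * 2
    + [ReferenceImage("stock_d", "stock")] * 2
    + [ReferenceImage("blog_e", "blog")]
)
label = nutrition_label(refs)
print(label["trusted_share"], label["flag_looser_sources"])  # → 0.9 False
```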

Style is a huge part of the image generation process, and many novices don’t know why certain styles suit certain subjects, or what goes into a style at all. The ingredients-list concept can also help users understand which descriptions make up the style they’re seeing. Within the menu, the user might experiment with “high-angle” versus “low-angle” options, or “wide shot” instead of “close-up.” Exposing more of the range of choices the system makes could reduce the trial and error most users experience.
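One way to picture a style “ingredients list” is as a small record of named choices that can be swapped one at a time. This is a minimal sketch; the `StyleRecipe` fields and the `OPTIONS` lists are hypothetical examples, not the ingredients any particular generator actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass, fields, replace

# Hypothetical option lists for each style ingredient.
OPTIONS = {
    "medium": ["photo", "watercolor", "3D render"],
    "camera_angle": ["high-angle", "eye-level", "low-angle"],
    "shot": ["close-up", "medium shot", "wide shot"],
}


@dataclass(frozen=True)
class StyleRecipe:
    medium: str
    camera_angle: str
    shot: str

    def ingredients(self) -> list[str]:
        """List each ingredient as 'name: value' for display in the menu."""
        return [f"{f.name}: {getattr(self, f.name)}" for f in fields(self)]

    def to_prompt(self) -> str:
        """Join the ingredient values into a prompt fragment."""
        return ", ".join(getattr(self, f.name) for f in fields(self))


def variants(recipe: StyleRecipe, ingredient: str) -> list[StyleRecipe]:
    """Return one recipe per alternative value of a single ingredient."""
    current = getattr(recipe, ingredient)
    return [replace(recipe, **{ingredient: opt}) for opt in OPTIONS[ingredient] if opt != current]


recipe = StyleRecipe(medium="photo", camera_angle="high-angle", shot="close-up")
for alt in variants(recipe, "camera_angle"):
    print(alt.to_prompt())
# → photo, eye-level, close-up
# → photo, low-angle, close-up
```

Changing one ingredient at a time lets a user compare, say, “high-angle” and “low-angle” directly instead of rewriting the whole prompt.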

Using census data, the tool could also help users select images of people that better represent the gender and racialized identities of a particular area. The image generator could automatically analyze its output to check whether it reflects that area’s demographics.
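The cross-check could be sketched as comparing the group proportions in the output against census shares. This sketch assumes the system already has a group label for each person in the output (itself a hard, error-prone step) and census shares given as fractions; the placeholder group names, the choice of total variation distance, and the 0.1 tolerance are all our assumptions.

```python
from __future__ import annotations

from collections import Counter


def total_variation(observed: Counter, expected_shares: dict[str, float]) -> float:
    """Half the L1 distance between observed proportions and expected shares (0 = identical)."""
    total = sum(observed.values())
    groups = set(observed) | set(expected_shares)
    # Counter returns 0 for groups absent from the output.
    return 0.5 * sum(abs(observed[g] / total - expected_shares.get(g, 0.0)) for g in groups)


def is_representative(labels: list[str], expected_shares: dict[str, float], tolerance: float = 0.1) -> bool:
    """True when the output's group mix is within `tolerance` of the area's shares."""
    if not labels:
        raise ValueError("no people detected in the output")
    return total_variation(Counter(labels), expected_shares) <= tolerance


# Placeholder groups and shares standing in for real census categories.
area_shares = {"group_a": 0.5, "group_b": 0.3, "group_c": 0.2}
print(is_representative(["group_a"] * 5 + ["group_b"] * 3 + ["group_c"] * 2, area_shares))  # → True
print(is_representative(["group_a"] * 10, area_shares))  # → False
```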

AI image generators are already changing the creative process. If our concept were made real, it would be a more powerful, transparent, and responsible AI image generator. Because AI will face ever greater scrutiny as it becomes part of daily life, industry leaders would be wise to approach transparency and trust from every angle.