Clint Rule | Principal Director, Design

Clint is a supportive guide for creative teams, a champion of human-centered design, and a collaborative partner to clients across industries.

The hand axe—humanity’s first tool and perhaps our longest-running invention—reminds us of a fundamental truth: control is a feedback loop. The hand axe works because it’s responsive. A swing meets resistance; sound and tactile feedback guide the next move. This interaction loop, ancient yet enduring, anchors how we design systems today, no matter their complexity.

But what happens when control moves further from our hands, when distance, latency, or sheer computational power demands we rethink how humans and machines collaborate? Enter shared autonomy, a design frontier redefining trust, ethics, and even the notion of human valor.

To understand this new frontier, we must explore the evolving levels of control—from direct control to programmed autonomy to shared autonomy—and uncover the unique challenges and opportunities each brings.

The simplicity of direct control—press a button, steer a vehicle—relies on rich, immediate feedback. But direct control falters at a distance. Take NASA’s Psyche spacecraft, 20 million miles away, where communication delays make the once-intuitive feedback loop sluggish and incomplete. Even closer to Earth, challenges like human fatigue or task complexity reveal the limitations of direct control, paving the way for programmed autonomy.
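To put that delay in numbers, here is a rough back-of-the-envelope sketch. It assumes the signal travels at the speed of light in a vacuum and takes the 20-million-mile figure at face value; the function names are illustrative and do not come from any NASA tool.

```python
# Rough light-time estimate for a command sent across deep space.
# Assumption: signals travel at the vacuum speed of light, with no relay
# or processing overhead. Function names are hypothetical.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition
METERS_PER_MILE = 1609.344            # exact, international mile


def one_way_delay_seconds(distance_miles: float) -> float:
    """Time for a signal to cover the given distance once."""
    return distance_miles * METERS_PER_MILE / SPEED_OF_LIGHT_M_PER_S


def round_trip_delay_seconds(distance_miles: float) -> float:
    """Time between sending a command and seeing its effect."""
    return 2 * one_way_delay_seconds(distance_miles)


one_way = one_way_delay_seconds(20_000_000)
round_trip = round_trip_delay_seconds(20_000_000)
print(f"one way: {one_way:.0f} s, round trip: {round_trip:.0f} s")
```

At that distance a command takes a little under two minutes to arrive, and the operator waits more than three and a half minutes to see its effect. A swing-and-adjust loop cannot survive that gap.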

Direct control thrives on an instantaneous, rich input-feedback loop. Tactile resistance or sound guides users to adjust in real time, enhancing precision and intuition.

Programmed control thrives in tidy, structured environments. Picture a child’s toy robot executing a sequence of commands: a simple, repeatable loop that mirrors systems like the mission planners at NASA’s Jet Propulsion Laboratory or enterprise-level no-code platforms.

Yet complexity strains programmed systems. When faced with dynamic, unpredictable conditions, these systems risk brittleness. Unforeseen variables compound, introducing failures where human intuition once filled the gaps.
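That brittleness is easy to see in miniature. The sketch below is a hypothetical toy robot on a grid, not any real product's API: it replays a fixed command sequence and has no way to adapt when the world differs from what the programmer assumed.

```python
# A toy "programmed control" robot: it executes a fixed command list
# and fails as soon as reality deviates from the plan.
# All names here are hypothetical and for illustration only.

MOVES = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


class BlockedError(RuntimeError):
    """Raised when the next programmed step runs into an obstacle."""


def run_program(start, commands, obstacles):
    """Replay commands from start; return the final (x, y) position."""
    x, y = start
    for step, command in enumerate(commands, start=1):
        dx, dy = MOVES[command]
        target = (x + dx, y + dy)
        if target in obstacles:
            # The program has no fallback: it can only stop.
            raise BlockedError(f"step {step} ({command}) blocked at {target}")
        x, y = target
    return (x, y)


# In the tidy environment the program was written for, it succeeds.
clear_result = run_program((0, 0), "EENN", obstacles=set())

# One unforeseen rock on the route and the same program fails.
try:
    run_program((0, 0), "EENN", obstacles={(2, 0)})
    failed = False
except BlockedError:
    failed = True
```

The program is correct for the environment it was written for and useless one rock later. A human operator would simply steer around the obstacle.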

The Cozmo robot toy exemplifies programmed control: it gives operators low-code/no-code interfaces that feel like task management tools, with no engineering expertise required.

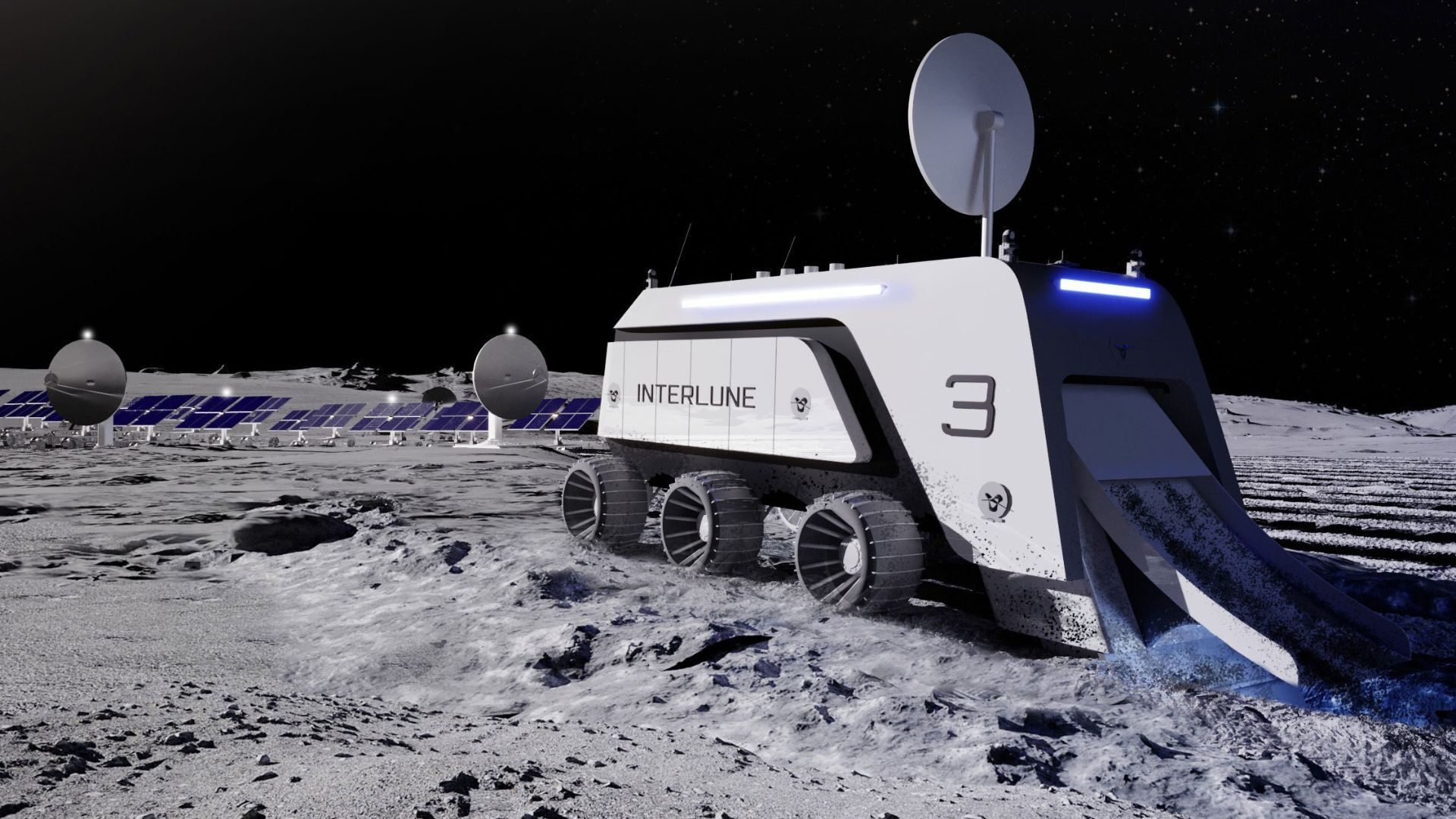

The answer to that brittleness lies in shared autonomy, where humans set objectives and intelligent agents dynamically execute tasks. NASA’s Perseverance rover exemplifies this: over 80% of its planetary navigation relies on autonomous decision-making. But autonomy introduces a new challenge: trust.

This trust challenge was evident in Teague’s work with Overland AI, a company developing off-road autonomous vehicle systems for defense applications. As their design partner, Teague created a user interface for Overwatch, Overland AI’s control software. A key feature of this interface was the "cost map," a visual tool that displays the obstacles a vehicle detects and how it assesses the risk of navigating its terrain.

For operators, the cost map serves as a window into the system’s logic. By revealing how the robot interprets its environment and makes decisions, the interface builds trust. Operators can empathize with the system’s “thinking,” which is critical for ensuring confidence in the machine’s autonomy. This collaboration highlighted a core truth: shared control is only effective when the human side of the equation feels empowered and informed.
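To make the idea of a cost map concrete, here is a minimal sketch. It is not Overland AI's implementation or the Overwatch interface. It assumes a cost map can be modeled as a small grid of traversal costs and uses a textbook Dijkstra search as a stand-in planner. The grid values and the `cheapest_path` name are hypothetical.

```python
import heapq

# A toy cost map: each cell holds the assumed cost of driving onto it.
# Low values are easy ground; high values are risky terrain.
COST_MAP = [
    [1, 9, 1],
    [1, 9, 1],
    [1, 1, 1],
]


def cheapest_path(cost_map, start, goal):
    """Dijkstra search over a 4-connected grid.

    Returns (path, total_cost), where total_cost sums the cost of every
    cell entered after the start cell. Returns (None, inf) if the goal
    cannot be reached.
    """
    rows, cols = len(cost_map), len(cost_map[0])
    best = {start: 0.0}
    previous = {}
    frontier = [(0.0, start)]
    while frontier:
        cost_so_far, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost_so_far > best[cell]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = cell
        for neighbor in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = neighbor
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            new_cost = cost_so_far + cost_map[nr][nc]
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = cell
                heapq.heappush(frontier, (new_cost, neighbor))
    if goal not in best:
        return None, float("inf")
    # Walk backwards from the goal to recover the chosen route.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return path, best[goal]


path, total = cheapest_path(COST_MAP, start=(0, 0), goal=(0, 2))
print(path, total)
```

In this toy grid the planner takes the long way around the high-cost column instead of cutting straight across it. Showing the operator the same numbers the planner sees is what turns a puzzling detour into an understandable decision.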

The Perseverance rover showcases shared control, setting records with 700 m of autonomous travel on Mars, where 85% of its navigation relies on AI systems. Operators set objectives, while the rover independently determines how to achieve them.

Shared autonomy doesn’t just reshape technical processes; it redefines human roles. Trust, ethics, and even camaraderie come into play as we delegate control to non-human agents. Traditional notions of leadership, ingenuity, and responsibility must evolve to account for the growing partnership between humans and machines.

In this shift, the spotlight broadens. The teams designing, coding, and testing autonomous systems share recognition with the systems themselves. What once centered on individual brilliance now transforms into collective ingenuity, celebrating the collaboration between humans and machines.

Shared autonomy is not just a technological milestone; it’s a cultural one. It challenges us to rethink control, celebrate the interplay between humans and machines, and bridge the trust gap with thoughtful design. As we hand over more responsibility to autonomous systems, the question isn’t whether they’ll succeed—it’s how we, as their human counterparts, will evolve alongside them.

This is the next chapter of design: where tools are no longer extensions of us but collaborators in a shared loop of control, trust, and progress.